I’ve been using the new deep research models a lot lately. Naturally, I wanted to know which one is the best, and I was going to ask a deep research model to answer that question for me. But I had a concern: would each model be biased in favor of itself? So I decided to ask each of the five most popular public deep research models to rate all five, including itself.

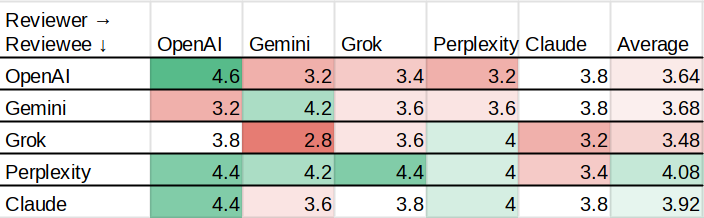

I won’t keep you in suspense; here are the results! Each row shows the ratings one model received. Perplexity earned the highest average score, 4.08 out of a possible 5 points.

Scroll down to see more detailed results and commentary from each of the five contestants. I also decide which model is the most humble in its self-assessment, and which candidate wins a ranked-choice vote.

Methodology

These models have mostly solved the hallucination problem. They cite their sources and rely on what they find in web search results. So I’ll ask them to research what reviewers say about models like themselves and compile a report based on that research.

First, I asked each model to research how people use deep research models and to decide on about five criteria for judging them. Here’s the exact prompt I used:

I’m doing research on Deep Research AI agents in 2025. For my report, I want to produce a chart comparing top models. I want you to think about Deep Research agents and research what people say about them. Then, I want you to tell me what columns I should use for the chart. For each column, we’re going to rate each model on a scale of 1 to 5 for that attribute. Attributes might be “Speed” or “Cost”. Come up with 4 or 5 columns which you think will be the best for understanding the different advantages of these models.

For each column, clearly specify what is being measured. Outline what might earn 2, 3, 4, or 5 stars. Encourage tough ratings to create dynamic range. Give each column a one-word name.

Then I sent a follow-up prompt asking each model to produce a chart for the models I’m reviewing:

I’m reviewing these models:

OpenAI Deep Research

Google Gemini Deep Research

xAI Grok-3 DeepSearch

Perplexity AI Deep Research

Claude 3.7 with Thinking and Web Search

I want you to rate each model on a scale from 1-5 for each of the columns that you identified. Use star emojis like “⭐⭐⭐⭐” for 4 stars. Be a tough rater to create dynamic range.

Add a final column called “Overall” which is a synthesis of the other columns. It can be a simply average or a weighted average if you think that’s more appropriate.

Make sure you end with a chart, containing one row for each model, and one column for each of the attributes you identified.

I told Gemini and Claude to think deeply and search the web, and for the other models I selected the “Deep Research” button in their UI.

To get more granular results, I then asked each model to calculate a numeric Overall score, which produced the summary chart you can see at the top. Here’s the follow-up prompt:

Recalculate the Overall column, giving a numeric score out of 5.0. Calculate this as an average or weighted average of your column-wise ratings. Return a chart with two columns, one with the model name, and one with a single number between 1.0 and 5.0.
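The prompt leaves the choice between a simple and a weighted average up to each model. As a rough illustration of that arithmetic, here is a minimal Python sketch. The column names, star ratings, and weights are all hypothetical (not taken from any model’s actual chart), and `stars_to_number` and `overall` are names made up for this example.

```python
from __future__ import annotations  # allows the `X | None` hints on older Python 3

def stars_to_number(stars: str) -> int:
    """Count the star emojis in a rating string such as "⭐⭐⭐⭐"."""
    return stars.count("⭐")

def overall(ratings: dict[str, int], weights: dict[str, float] | None = None) -> float:
    """Average the column ratings; use a weighted average when weights are given."""
    if weights is None:
        weights = {column: 1.0 for column in ratings}  # equal weights = simple average
    total_weight = sum(weights[column] for column in ratings)
    weighted_sum = sum(ratings[column] * weights[column] for column in ratings)
    return round(weighted_sum / total_weight, 2)

# Hypothetical star ratings for one model in four hypothetical columns.
row = {
    "Speed": stars_to_number("⭐⭐"),
    "Accuracy": stars_to_number("⭐⭐⭐⭐"),
    "Depth": stars_to_number("⭐⭐⭐⭐⭐"),
    "Cost": stars_to_number("⭐⭐⭐"),
}

print(overall(row))  # 3.5
print(overall(row, {"Speed": 1, "Accuracy": 2, "Depth": 2, "Cost": 1}))  # 3.83
```

With equal weights you get the simple average; doubling the weight on two columns pulls the result toward those columns’ ratings.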

Results

Each model rated the other four models as well as itself. The charts all differ because I asked each model to make up its own columns and then give an “Overall” score. I’m including screenshots of their assessments, but you can click into the logs if you want to see more.

Perplexity AI Deep Research (logs)

I did this exercise with Perplexity first, because it’s my personal favorite deep research model. It’s the only model that gave out a single ‘⭐’ in any category, knocking OpenAI for being too expensive. Perplexity also gave itself poor marks for accuracy, so by its own assessment, you might not want to trust it.

Anthropic Claude with Thinking and Web Search (logs)

Claude gave me the best justifications for its ratings, with a written explanation of each score that you can see in the logs.

Google Gemini Deep Research (logs)

Gemini chose some good categories, but spent a lot of its output reminding me that its numbers were speculative and that the field is changing quickly. My prompt suggested “Speed” and “Cost” as example categories, and Gemini was the only model to buck that suggestion, using “insight”, “scope”, and “rigor” instead.

OpenAI Deep Research (logs)

ChatGPT’s deep research mode is sooo sloooow. I did everything in one go, because it takes around 30 minutes to get results, and I don’t have that kind of time for a blog post. It also limits me to 10 queries a month. Despite how long it takes, its results are underwhelming; it doesn’t really explain its answers. It was also the only model to give anyone 5 stars overall, awarding that honor to itself.

xAI Grok-3 DeepSearch (logs)

Grok gave solid written explanations for its answers, and I thought its response was the best composed of the five. Grok received the lowest ratings overall, but I personally loved the structure of its response and the way it incorporated links.

Winners

Overall, the most highly rated model is Perplexity, with an average rating of 4.08 stars out of 5. Good job, Perplexity! My own experience matches this; it’s my go-to.

I also wrote a script to run a Condorcet ranked-choice vote, and Perplexity won that too!
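The script itself isn’t included in this post, so what follows is only a hypothetical sketch of one way to find a Condorcet winner, not the code I used. It assumes each model’s ballot is its set of numeric Overall scores, treats equal scores as no preference, and returns `None` when no candidate beats every rival head-to-head. The candidate names A, B, and C and their scores are placeholders.

```python
from itertools import combinations

def condorcet_winner(ballots):
    """Return the candidate who beats every rival head-to-head, or None if nobody does.

    Each ballot maps candidate -> score (higher is better). Equal scores on a
    ballot count as no preference between that pair.
    """
    candidates = list(ballots[0])
    beaten = {c: set() for c in candidates}  # beaten[c] = rivals that c defeats
    for a, b in combinations(candidates, 2):
        a_wins = sum(1 for ballot in ballots if ballot[a] > ballot[b])
        b_wins = sum(1 for ballot in ballots if ballot[b] > ballot[a])
        if a_wins > b_wins:
            beaten[a].add(b)
        elif b_wins > a_wins:
            beaten[b].add(a)
    for c in candidates:
        if len(beaten[c]) == len(candidates) - 1:
            return c
    return None  # no Condorcet winner (e.g. a cycle or pairwise ties)

# Hypothetical ballots: one per rater, scores for placeholder models A, B, C.
ballots = [
    {"A": 4.5, "B": 4.0, "C": 3.0},
    {"A": 3.8, "B": 4.2, "C": 3.1},
    {"A": 4.1, "B": 3.9, "C": 4.0},
]
print(condorcet_winner(ballots))  # A
```

A Condorcet winner has to win every pairwise matchup. When preferences form a cycle (A beats B, B beats C, C beats A), there is none, and a fallback rule such as ranked pairs or Schulze would be needed; this sketch doesn’t implement one.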

The most humble model is Anthropic’s Claude, which gave itself a score of 3.8 compared to an average rating of 3.92 from all models. This fits my stereotype of Claude. Perplexity was also humble, rating itself a 4.0 compared to a 4.08 average. The other three models thought highly of themselves.
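One way to make “most humble” concrete is the gap between a model’s self-score and the average it received from all five raters, assuming that average includes the self-rating. A negative gap means the model rated itself below the consensus. With the numbers above, Claude’s gap is 3.8 - 3.92 = -0.12 and Perplexity’s is 4.0 - 4.08 = -0.08. The sketch below assumes a hypothetical `ratings[rater][ratee]` table with placeholder models A, B, and C; it computes each model’s gap and picks the most negative one.

```python
from statistics import mean

def humility_gaps(ratings):
    """Map each model to (its self-score) minus (the average score it received).

    ratings[rater][ratee] is the Overall score `rater` gave `ratee`. The average
    includes the model's own self-rating (an assumption). A negative gap means
    the model rated itself below the consensus.
    """
    models = list(ratings)
    gaps = {}
    for model in models:
        average_received = mean(ratings[rater][model] for rater in models)
        gaps[model] = round(ratings[model][model] - average_received, 2)
    return gaps

def most_humble(ratings):
    gaps = humility_gaps(ratings)
    return min(gaps, key=gaps.get)  # the most negative gap wins

# Hypothetical Overall scores for placeholder models A, B, C.
ratings = {
    "A": {"A": 3.5, "B": 4.0, "C": 3.0},
    "B": {"A": 4.0, "B": 4.5, "C": 3.5},
    "C": {"A": 4.5, "B": 4.0, "C": 3.0},
}
print(humility_gaps(ratings))  # {'A': -0.5, 'B': 0.33, 'C': -0.17}
print(most_humble(ratings))    # A
```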

Grok was the most representative of the five: its Overall ratings had a strong correlation (0.85) with the average of all five models’ ratings. In some sense, that makes it the most accurate rater, at least in this particular challenge.
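The post doesn’t spell out exactly how that correlation was computed. One plausible reading, sketched below under that assumption, is a Pearson correlation between one rater’s Overall scores and the average score each model received from all five raters. The `pearson` and `representativeness` helpers are hypothetical names, `pearson` is written out by hand so it runs on any Python 3 version, and the three-model ratings table is made up.

```python
from math import sqrt
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when a rater gives every model the same score
    return cov / sqrt(var_x * var_y)

def representativeness(ratings):
    """Correlate each rater's scores with the average score each model received.

    ratings[rater][ratee] is the Overall score `rater` gave `ratee`; the
    consensus average includes every rater, the one being compared too.
    """
    models = list(ratings)
    consensus = [mean(ratings[rater][m] for rater in models) for m in models]
    return {rater: pearson([ratings[rater][m] for m in models], consensus)
            for rater in models}

# Hypothetical Overall scores for placeholder models A, B, C.
ratings = {
    "A": {"A": 3.5, "B": 4.0, "C": 3.0},
    "B": {"A": 4.0, "B": 4.5, "C": 3.5},
    "C": {"A": 4.5, "B": 4.0, "C": 3.0},
}
scores = representativeness(ratings)
print({rater: round(r, 2) for rater, r in scores.items()})
```

Because Pearson correlation ignores constant offsets, a rater who scores everyone half a point higher than another rater gets the same correlation; here raters A and B differ only by such a shift.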

I’m declaring Grok the most underrated model. It was poorly rated, but the text of its contribution to the contest was stellar.

Final Thoughts

I’m going to keep using Perplexity. It’s well liked by reviewers, and subjectively I like its interface. Claude stood out to me for the quality of its writing, so I’m likely to use it when I want to use the resulting text directly. Grok was rated poorly, but I thought its analysis was surprisingly good. I’m not likely to use OpenAI’s entry, which is slow and delivers middling results.

For a wordier analysis of what’s good and what’s not about these models, you might like this excellent comparison of deep research models.
